AI has revolutionised the world as we know it. From virtual assistants like Siri or Cortana that handle menial tasks online to smart AI-driven robots that can perform the most intricate and complicated surgeries, Artificial Intelligence innovations aim to make human life much easier. Despite this, many people would still rather side with an AI-free world. This is because, as with everything in this world, AI has its cons along with its myriad pros.

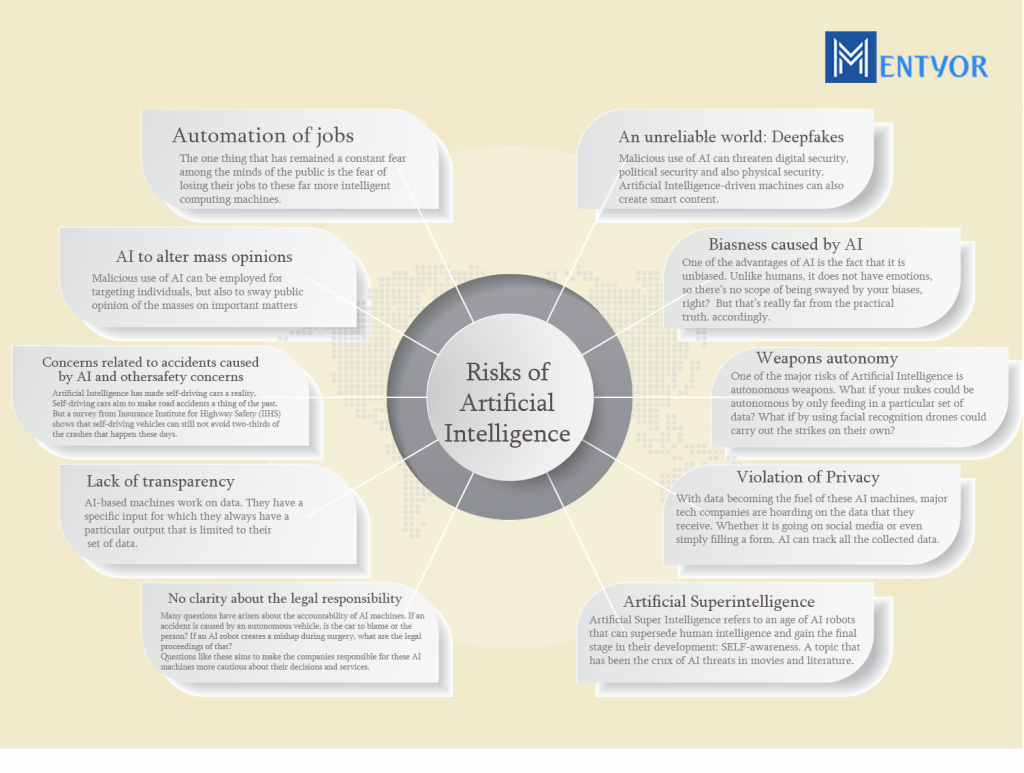

The risks of Artificial Intelligence are just as many as its blessings. But the point that underlies every risk of AI is the ethics of creating a super-smart sentient being.

AI-driven machines work based on the big data that is fed to them. They use this data as a reference library: they learn, adapt, and make sense of the world using it. This is where ethics comes into the picture, because the right kind of data must be fed to these machines. With the increasing use of AI in the military, the automation of weapons by AI-driven systems without human intervention is one of the major causes of concern. There are many other dangers of Artificial Intelligence as well, and this blog aims to shed light on them.

Is Artificial Intelligence A Threat? – Risks Of Artificial Intelligence

Artificial Intelligence simply refers to giving computing machines the ability to imitate human intelligence and decision-making. Unlike humans, AI does not tire of doing the same repetitive tasks every day. Instead, it can even “learn” to discover more efficient ways of doing the same task. As such, AI-driven machines can accomplish the same task that a human does, but at a much faster rate and with a more productive outcome.

It is not just the general public or conspiracy theorists; some of the most renowned minds in science and technology have also voiced their disapproval of AI. Elon Musk, the founder of SpaceX, CEO of Tesla and co-founder of Neuralink, is highly wary of the developments in Artificial Intelligence. He says he is quite “close to the cutting edge of AI” and that it “scares the hell” out of him. He even goes on to say that “AI is more dangerous than nukes”.[1]

In fact, even Stephen Hawking, one of the most renowned physicists of all time, spoke out about the unchecked developments in AI. He feared that AI might end up replacing human beings altogether.

With so many people disapproving of AI, it is worth looking into the threats of Artificial Intelligence.

Risks of Artificial Intelligence

Automation of jobs

One constant fear among the public is that of losing their jobs to these far more capable computing machines. This is no longer a hypothetical threat but an actual reality, as many machines are replacing labourers who work on repetitive tasks. The concern is no longer what type of jobs AI will replace, but rather to what degree jobs will be replaced.

According to a study by the Brookings Institution, automation will heavily affect almost 25% of all American jobs, or one-quarter of the total. These are the jobs in which roughly 70% of the tasks, which range from administrative work to office management, can be carried out by automation.

AI researchers suggest that AI will create as many jobs as it replaces. But one barrier remains: these new vacancies may require a higher educational background. Since that is not feasible for every stratum of society, the fear and uncertainty surrounding job automation remain.

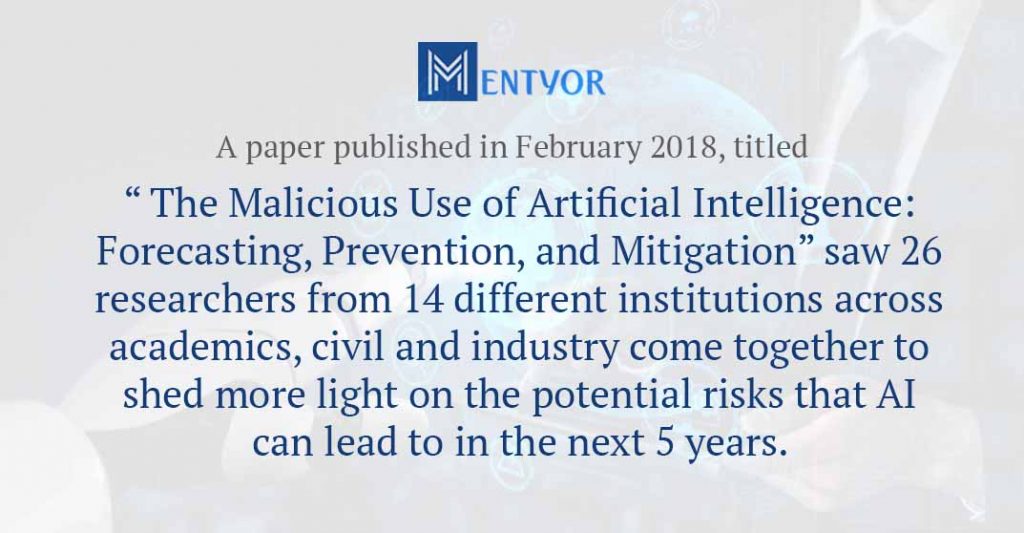

An unreliable world: Deepfakes

Another danger of AI is its malicious use to blackmail or intentionally harm someone. We live in a thoroughly digitized world and spend more of our time in the digital world than in the physical one. This gives rise to more propaganda, fake news and manipulated content online. A paper published in February 2018, titled “The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation”, saw 26 researchers from 14 different institutions across academia, civil society and industry come together to shed more light on the potential risks that AI could pose over the following 5 years. [2]

Malicious use of AI can threaten digital security, political security and also physical security. Artificial Intelligence-driven machines can also create smart content. They can construct faces of people who never existed, make clones, manipulate voices and pictures, and even engage in smart advertising such as targeting just the right audience for your business. The manipulative power of AI is not always used in a righteous or even legal way.

One example of malicious content made with AI is the deepfake. Deepfake tools, some of them openly available, can manipulate video footage. Editing faces in a moving video used to be considered immensely difficult, which is why videos were predominantly trusted as pieces of evidence. But with the advent of deepfakes, it is now possible to swap or change faces in a moving video.

For some, it could be something as harmless as making a video with your favourite actors, but applications of this software can get scary really fast. It could be employed to tamper with a political campaign by creating fake videos of politicians saying racist or sexist things that they never said. Explicit videos of famous celebrities are made using this software and then used for blackmail. Thus AI can make things go awry really fast.

AI to alter mass opinions

Malicious use of AI can be employed not only to target individuals but also to sway the opinion of the masses on important matters. One of the best-known cases of AI being used to sway public opinion is that of Cambridge Analytica. Cambridge Analytica analysed the data generated on Facebook by 87 million profiles to identify voters who could be “persuaded” and swayed to support and vote for the party it was working for.

The company was able to persuade people into becoming supporters by showing them tailor-made content. It analysed their likes, dislikes and personality to show them exactly the content that would move them. The engineers at Cambridge Analytica built an AI model that could run hundreds to thousands of variations of an ad to identify which one would suit an individual the best.
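The idea of testing many ad variations and keeping the one that works best for each kind of person can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Cambridge Analytica's actual model: the log, the segment names, the variant names and the function `best_variant_per_segment` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical impression log: (audience_segment, ad_variant, clicked)
impressions = [
    ("segment_a", "ad_1", True),
    ("segment_a", "ad_1", True),
    ("segment_a", "ad_2", False),
    ("segment_a", "ad_2", True),
    ("segment_b", "ad_1", False),
    ("segment_b", "ad_1", False),
    ("segment_b", "ad_2", True),
    ("segment_b", "ad_2", True),
]

def best_variant_per_segment(log):
    """Return, for each segment, the (variant, click_rate) with the highest click rate."""
    shown = defaultdict(int)
    clicked = defaultdict(int)
    for segment, variant, was_clicked in log:
        shown[(segment, variant)] += 1
        clicked[(segment, variant)] += int(was_clicked)

    best = {}
    for (segment, variant), count in shown.items():
        rate = clicked[(segment, variant)] / count
        # Keep the variant with the highest observed click rate so far
        if segment not in best or rate > best[segment][1]:
            best[segment] = (variant, rate)
    return best

best = best_variant_per_segment(impressions)
for segment, (variant, rate) in best.items():
    print(f"{segment}: show {variant} (click rate {rate:.0%})")
```

With real data, the same comparison would run over far more variants and much finer-grained profiles, which is what makes this kind of targeting so persuasive.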

Bias caused by AI

One of the supposed advantages of AI is that it is unbiased. Unlike humans, it does not have emotions, so there’s no scope for it to be swayed by biases, right? But that’s really far from the practical truth. One of the recently identified risks of Artificial Intelligence is the biased algorithm. Since an AI system runs on data, it reacts according to the data that it is fed. If a researcher feeds it a racist, sexist or otherwise biased point of view, it will react to situations accordingly.

One example of this is COMPAS, a risk assessment algorithm used by courts in Florida and other states. It is used to estimate the probability of a defendant becoming an offender again. But a 2016 investigation by ProPublica showed that the algorithm was biased against African Americans. It had a tendency to flag Black defendants with a negligible criminal past as a higher risk than white defendants with a more substantial criminal past. [3]
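One common way to check a risk algorithm for this kind of bias is to compare how often it wrongly flags people who did not go on to reoffend, group by group. The sketch below shows that check on a handful of invented records. The data, the group labels and the function `false_positive_rate` are assumptions made for illustration; they are not ProPublica's dataset or code.

```python
# Hypothetical records: (group, flagged_high_risk, reoffended)
records = [
    ("A", True, False),
    ("A", True, False),
    ("A", False, False),
    ("A", True, True),
    ("B", True, False),
    ("B", False, False),
    ("B", False, False),
    ("B", True, True),
]

def false_positive_rate(rows, group):
    """Share of people in `group` who did not reoffend but were flagged high risk."""
    did_not_reoffend = [r for r in rows if r[0] == group and not r[2]]
    if not did_not_reoffend:
        return 0.0
    wrongly_flagged = sum(1 for r in did_not_reoffend if r[1])
    return wrongly_flagged / len(did_not_reoffend)

fpr_a = false_positive_rate(records, "A")
fpr_b = false_positive_rate(records, "B")
print(f"Group A false positive rate: {fpr_a:.2f}")
print(f"Group B false positive rate: {fpr_b:.2f}")
```

A large gap between the two rates means one group is bearing more of the algorithm's mistakes, even if its overall accuracy looks similar for both.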

Concerns related to accidents caused by AI and other safety concerns

Artificial Intelligence has made self-driving cars a reality. Self-driving cars aim to make road accidents a thing of the past. But a study from the Insurance Institute for Highway Safety (IIHS) suggests that self-driving vehicles might still fail to avoid two-thirds of the crashes that happen these days. [4]

Apart from this, since self-driving cars essentially run on software, they are also at risk of being hacked. Once a car is hacked, control of it could be used for ill-intended purposes.

Weapons autonomy

One of the major risks of Artificial Intelligence is autonomous weapons. What if nukes could be made autonomous simply by feeding in a particular set of data? What if drones could use facial recognition to carry out strikes on their own?

As AI becomes smarter, these are the questions being asked about weapons autonomy. More than 30,000 people, including thousands of AI and robotics researchers, have signed an open letter expressing their disapproval of creating a world full of AI weapons.[5] In the letter, they point out that, unlike nuclear weapons, autonomous weapons do not require expensive or hard-to-obtain raw materials, so they are comparatively easy to mass-produce. Once such weapons reach the black market, they might even fall into the hands of dictators, terrorists and assassins. The signatories therefore believe that there are many better ways of making a battlefield safer for civilians than having an AI-dominated war.

Lack of transparency – Risks Of Artificial Intelligence

AI-based machines work on data. For a given input, they produce an output that is shaped and limited by the data they were trained on. As humans, we tend to believe in the superiority of computers and take every decision they produce as the right one. But we are generally left in the dark about the reasoning behind a machine's decision. Especially in healthcare and the military, where even a single decision could be fatal, it is important to know the reasoning behind a decision.

Violation of Privacy

With data becoming the fuel of these AI machines, major tech companies are hoarding the data that they receive. Whether you are using social media or simply filling in a form, AI can track all the collected data. As such, privacy in a digital setting is becoming difficult to come by. Tech giants like Apple are working to make privacy more easily available to their users through updates that help users identify the apps and pages collecting their data. Users can then either allow these to use the data or deny permission.

No clarity about the legal responsibility

Many questions have arisen about the accountability of AI machines. If an accident is caused by an autonomous vehicle, is the car to blame or the person? If an AI robot causes a mishap during surgery, what legal proceedings follow?

Questions like these aim to make the companies responsible for these AI machines more cautious about their decisions and services.

Artificial Superintelligence – Risks Of Artificial Intelligence

Artificial Super Intelligence refers to an age of AI robots that can supersede human intelligence and reach the final stage in their development: self-awareness. This topic has been the crux of AI threats in movies and literature. Given Stephen Hawking’s warning that “The development of full artificial intelligence could spell the end of the human race”, it is hard not to be wary of AI developments when a master physicist warns against them.

Conclusion

Artificial Intelligence is a double-edged sword. It surely does make our lives easier, but it also brings along a host of risks. The dangers of AI cannot be ignored. As the world grows more data-driven and robots become the new assistants, it is only sensible to take the new developments with a grain of salt.

References

[1] Cuthbertson, A. (2020, July 27). Elon Musk claims AI will overtake humans “in less than five years.” The Independent. https://www.independent.co.uk/life-style/gadgets-and-tech/news/elon-musk-artificial-intelligence-ai-singularity-a9640196.html

[2] Brundage, M., et al. (2018). The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation. https://arxiv.org/pdf/1802.07228.pdf

[3] Angwin, J., Larson, J., Mattu, S., & Kirchner, L. (2016, May 23). Machine Bias. ProPublica. https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

[4] Self-driving vehicles could struggle to eliminate most crashes. (n.d.). IIHS-HLDI Crash Testing and Highway Safety. https://www.iihs.org/news/detail/self-driving-vehicles-could-struggle-to-eliminate-most-crashes

[5] Kramar, J. (2021, November 23). Autonomous Weapons Open Letter: AI & Robotics Researchers. Future of Life Institute. https://futureoflife.org/2016/02/09/open-letter-autonomous-weapons-ai-robotics/
